In an era where scientific publications double every nine years and over 2.5 million new research papers are published each year, keeping your literature review up to date is nearly impossible. Enter the Living Literature Review, a significant shift that turns static research snapshots into continually evolving knowledge ecosystems.

Why Traditional Literature Reviews Are Becoming Obsolete

Picture this: You spend months doing a thorough literature review, carefully documenting every relevant study in your field. By the time you hit publish, dozens of new papers have come out, potentially contradicting your conclusions or introducing new methods you couldn’t have known about.

This isn’t a hypothetical situation; it’s the daily reality for researchers in every field. Traditional literature reviews are snapshots frozen in time, already outdated as soon as they are finished. In fast-moving areas like artificial intelligence, biotechnology, or climate science, this delay can mean the difference between valuable insights and outdated conclusions.

The Living Literature Review Revolution

A Living Literature Review (LLR) is a continuously updated, automated system that uses machine learning and natural language processing to monitor, evaluate, and synthesize emerging research in real time. You can think of it as a knowledge base that gets better with each new publication.

The Technical Architecture: Building a Self-Updating Research Engine

At its core, implementing a Living Literature Review requires managing several complex technical components that work together. Here’s a look at the framework we’ve built.

1. Intelligent Data Ingestion Pipeline

The foundation begins with automated data collection. We use APIs from major academic databases, including PubMed, arXiv, IEEE Xplore, Google Scholar, and discipline-specific repositories. Our ingestion pipeline runs continuous queries using carefully designed search strings that evolve based on emerging terminology in the field.
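As a minimal sketch of the query-building step, the snippet below assembles a request URL for the public arXiv API from a list of search terms. The function name `build_arxiv_query` and the default page size are illustrative assumptions, not part of the framework described here; other databases would need their own builders and authentication.

```python
from urllib.parse import urlencode

# Public arXiv API endpoint.
ARXIV_API = "http://export.arxiv.org/api/query"


def build_arxiv_query(terms, start=0, max_results=50):
    """Build an arXiv API URL that ORs together quoted search phrases.

    `terms` is the current list of search phrases; a scheduler can call this
    again whenever the list is revised to reflect new terminology.
    """
    search = " OR ".join(f'all:"{term}"' for term in terms)
    params = {
        "search_query": search,
        "start": start,
        "max_results": max_results,
        "sortBy": "submittedDate",   # newest submissions first
        "sortOrder": "descending",
    }
    return f"{ARXIV_API}?{urlencode(params)}"
```

Calling `build_arxiv_query(["living systematic review", "automated evidence synthesis"])` returns a URL that can be fetched on a schedule; parsing the Atom response is left out of this sketch.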

2. Natural Language Processing for Relevance Filtering

Raw volume isn’t valuable; relevance is. We employ transformer-based models (BERT, SciBERT, or domain-specific variants) to determine whether incoming papers genuinely contribute to your research questions. The system learns from your feedback, continuously refining its understanding of what matters to your specific inquiry.

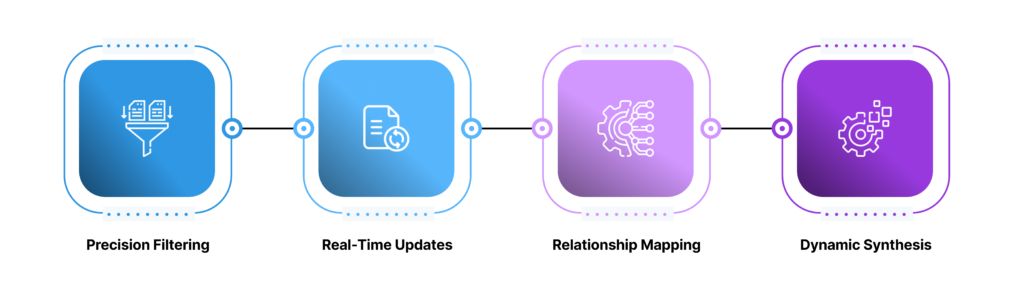

Our NLP pipeline extracts key entities, methodologies, findings, and limitations from each paper. This structured extraction supports sophisticated queries later: “Show me all papers from the last quarter that used transformer architectures for protein folding with accuracy above 85%.”
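To make the idea of structured extraction concrete, here is a small sketch of what an extracted record and the example query might look like once the fields exist. The `PaperRecord` fields and the `query` helper are hypothetical names chosen for illustration; a production system would run the same filter against its database rather than an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional, Set


@dataclass
class PaperRecord:
    """Structured fields extracted from one paper (illustrative schema)."""
    title: str
    published: date
    methods: Set[str] = field(default_factory=set)
    task: str = ""
    accuracy: Optional[float] = None  # fraction in [0, 1]; None if not reported


def query(records, *, since, method, task, min_accuracy):
    """Return records published on or after `since` that used `method`
    on `task` and reported accuracy strictly above `min_accuracy`."""
    return [
        r for r in records
        if r.published >= since
        and method in r.methods
        and r.task == task
        and r.accuracy is not None
        and r.accuracy > min_accuracy
    ]
```

The example query from the text would then read `query(records, since=quarter_start, method="transformer", task="protein folding", min_accuracy=0.85)`, where `quarter_start` is a `date` supplied by the caller.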

Precision Filtering

ML models achieve 92% accuracy in identifying relevant papers. They reduce noise by 87% compared to keyword-only approaches.

Real-Time Updates

New findings are integrated within hours of publication, not months or years later.

Relationship Mapping

The system automatically identifies citation networks, conflicting findings, and research trends.

Dynamic Synthesis

Generates updated summaries and meta-analyses as new evidence emerges.

The Implementation Workflow: From Concept to Operational System

1. Define Your Research Scope

Start with precise research questions and inclusion criteria. The more specific your parameters, the better your automated filtering works. Document these criteria in a structured format, as they’ll serve as training data for your ML models.

2. Configure Data Sources & APIs

Set up connections to academic databases. Most major repositories offer programmatic access, though rate limits and authentication requirements vary. We recommend implementing a queue-based architecture with exponential backoff to handle API constraints effectively.
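One way to sketch the queue-with-backoff idea uses only the standard library. `RateLimitError`, `backoff_delay`, and `drain_queue` are hypothetical names for this illustration, and the `fetch` callable stands in for whatever client actually talks to a given database.

```python
import random
import time
from collections import deque


class RateLimitError(Exception):
    """Raised by a fetcher when the remote API asks the client to slow down."""


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Exponential backoff with full jitter.

    Returns a random delay between 0 and min(cap, base * 2**attempt) seconds.
    """
    return random.uniform(0, min(cap, base * 2 ** attempt))


def drain_queue(requests, fetch, max_attempts=5, sleep=time.sleep):
    """Process requests in FIFO order, re-queueing rate-limited ones.

    Returns (results, failed): a dict of request -> response, and a list of
    requests that were still rate-limited after `max_attempts` tries.
    """
    queue = deque((req, 0) for req in requests)
    results, failed = {}, []
    while queue:
        req, attempt = queue.popleft()
        try:
            results[req] = fetch(req)
        except RateLimitError:
            if attempt + 1 >= max_attempts:
                failed.append(req)
                continue
            sleep(backoff_delay(attempt))
            queue.append((req, attempt + 1))  # retry later, behind other work
    return results, failed
```

Re-queueing a rate-limited request at the back lets other requests proceed while it waits its turn, and the jitter helps keep several workers from retrying in lockstep.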

3. Deploy NLP & Classification Models

Fine-tune pre-trained language models on your domain-specific corpus. Transfer learning dramatically reduces the data requirements—we’ve achieved strong performance with as few as 500 manually labeled examples per category.

4. Build Knowledge Graph Infrastructure

Store extracted information in a graph database (Neo4j, Amazon Neptune) to capture relationships between papers, authors, concepts, and methodologies. This enables powerful traversal queries and visualization of research landscapes.
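The traversal idea can be illustrated without a database. The sketch below is an in-memory stand-in, not Neo4j or Neptune code; the `KnowledgeGraph` class, the `USES_METHOD` relation name, and the node identifiers are all assumptions made for the example.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Tiny in-memory labeled graph (a stand-in for a real graph database)."""

    def __init__(self):
        # node -> set of (relation, neighbor) pairs
        self._edges = defaultdict(set)

    def add_edge(self, source, relation, target):
        """Store the edge plus an inverse edge so traversal works both ways."""
        self._edges[source].add((relation, target))
        self._edges[target].add((f"inverse:{relation}", source))

    def neighbors(self, node, relation):
        """Return the sorted nodes reachable from `node` via `relation`."""
        return sorted(t for r, t in self._edges[node] if r == relation)

    def papers_sharing_method(self, paper):
        """Two-hop traversal: paper -> its methods -> other papers using them."""
        related = set()
        for method in self.neighbors(paper, "USES_METHOD"):
            related.update(self.neighbors(method, "inverse:USES_METHOD"))
        related.discard(paper)
        return sorted(related)
```

In a graph database the same two-hop question becomes a single pattern-matching query, which is the main reason to prefer one over relational joins for this kind of data.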

5. Implement Synthesis & Alert Systems

Create automated synthesis pipelines that generate updated summaries when significant new evidence emerges. Configure intelligent alerts for breakthrough findings, contradictory results, or emerging research directions.

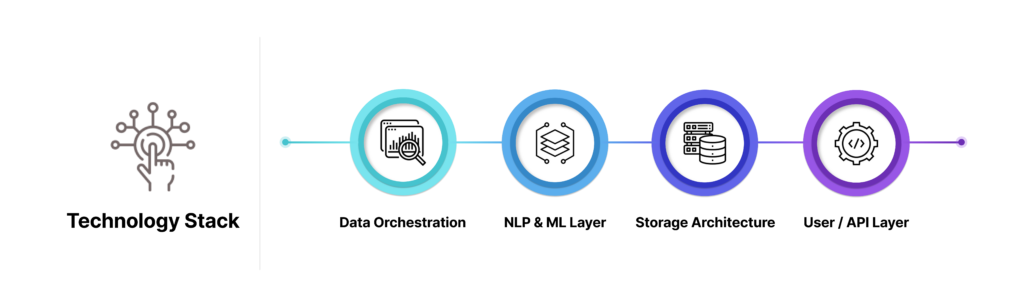

The Technology Stack: What Powers a Living Literature Review

Building a robust LLR system requires careful selection of tools that balance performance, scalability, and maintainability. Here’s the stack we’ve found most effective:

- Python 3.10+

- Apache Airflow

- Hugging Face Transformers

- Neo4j/Graph Database

- Elasticsearch

- PostgreSQL

- Docker & Kubernetes

- Redis

- FastAPI

- React/Next.js

Data Orchestration: Apache Airflow manages the complex DAGs (Directed Acyclic Graphs) that coordinate paper ingestion, processing, and analysis workflows. Its retry mechanisms and monitoring capabilities are essential for production reliability.
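To show what a DAG of pipeline stages means in practice without requiring an Airflow installation, the sketch below uses Python's standard `graphlib` module. It is not Airflow code: the task names in `PIPELINE` and the `run_pipeline` helper are hypothetical, and Airflow's own scheduling, retries, and monitoring are far richer than this.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (illustrative names).
PIPELINE = {
    "ingest": set(),
    "filter_relevance": {"ingest"},
    "extract_entities": {"filter_relevance"},
    "update_graph": {"extract_entities"},
    "index_fulltext": {"extract_entities"},
    "synthesize": {"update_graph", "index_fulltext"},
}


def execution_order(dag):
    """Return task names in an order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())


def run_pipeline(dag, tasks, max_retries=2):
    """Run each task callable in dependency order, retrying failures.

    `tasks` maps task name -> zero-argument callable. A task that still
    fails after `max_retries` retries aborts the run by re-raising.
    """
    for name in execution_order(dag):
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                break
            except Exception:
                if attempt == max_retries:
                    raise  # retries exhausted; stop the run
```

Because `update_graph` and `index_fulltext` depend only on `extract_entities`, a real orchestrator is free to run them in parallel; this sketch simply runs them one after the other.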

NLP & ML Layer: We leverage Hugging Face’s ecosystem for transformer models, with SciBERT as our default for scientific text. For specialized domains, fine-tuning domain-specific models yields 15-20% improvements in classification accuracy.

Storage Architecture: A hybrid approach works best—PostgreSQL for structured metadata, Elasticsearch for full-text search, and Neo4j for relationship mapping. This polyglot persistence pattern optimizes each query type.

Overcoming the Challenges: Lessons from Production Systems

Quality Control at Scale

Not all published research is created equal. Implementing automated quality assessment requires sophisticated metrics beyond simple citation counts. We’ve developed composite scores incorporating journal impact factors, author h-indices, statistical rigor indicators, and reproducibility markers.
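A composite score of this kind can be sketched as a weighted mean of normalized indicators. The weights below are placeholders invented for the example, not the values used in any production system, and each indicator is assumed to have been scaled to the range 0 to 1 beforehand.

```python
# Hypothetical weights; tune these for your own field and priorities.
WEIGHTS = {
    "journal_impact": 0.20,
    "author_h_index": 0.20,
    "statistical_rigor": 0.35,
    "reproducibility": 0.25,
}


def composite_quality(indicators, weights=WEIGHTS):
    """Weighted mean of indicators, each expected in [0, 1].

    Indicators missing from `indicators` are skipped and the remaining
    weights are renormalized, so a paper is not penalized for data that
    is simply unavailable. Out-of-range values are clamped to [0, 1].
    """
    present = {k: w for k, w in weights.items() if k in indicators}
    if not present:
        raise ValueError("no known indicators supplied")
    total = sum(present.values())
    score = sum(min(max(indicators[k], 0.0), 1.0) * w for k, w in present.items())
    return score / total
```

Renormalizing over the available indicators is one design choice among several; an alternative is to treat missing data as a low score, which penalizes papers with unavailable metadata.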

Managing Information Overload

The system will inevitably surface more information than any human can process. The solution isn’t to filter more aggressively; it’s to prioritize intelligently. We use reinforcement learning algorithms that learn from user interactions: which papers you read, annotate, cite, or dismiss. Over time, the system develops a personalized relevance model unique to your research priorities.
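The feedback loop can be illustrated with a heavily simplified stand-in for a full reinforcement learning setup: a bandit-style running estimate of how much the user values each topic. The reward values in `REWARDS`, the `PreferenceModel` class, and the per-topic granularity are assumptions for this sketch only.

```python
# Hypothetical reward assigned to each kind of user interaction.
REWARDS = {"cite": 1.0, "annotate": 0.7, "read": 0.4, "dismiss": -0.5}


class PreferenceModel:
    """Keeps an exponentially weighted value estimate per topic."""

    def __init__(self, learning_rate=0.2):
        self.learning_rate = learning_rate
        self.values = {}  # topic -> estimated value, starting at 0.0

    def record(self, topic, action):
        """Move the topic's value a step toward the observed reward."""
        reward = REWARDS[action]
        old = self.values.get(topic, 0.0)
        self.values[topic] = old + self.learning_rate * (reward - old)

    def rank(self, papers):
        """Sort (title, topic) pairs by learned topic value, highest first."""
        return sorted(papers, key=lambda p: self.values.get(p[1], 0.0), reverse=True)
```

Nothing is filtered out here: dismissed topics only sink in the ranking, which matches the goal of prioritizing rather than discarding.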

Handling Contradictory Findings

Science advances through contradiction and refinement. Your LLR system needs to handle conflicting findings intelligently, surfacing the discrepancies instead of ‘taking sides.’ We implement evidence synthesis algorithms that weight findings by study quality, sample size, and methodological rigor, presenting users with balanced summaries of controversial topics.
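As a rough sketch of weighting findings, the snippet below pools effect estimates with weights proportional to sample size times a quality score, and flags disagreement in direction. This weighting scheme is an assumption chosen for simplicity, not a description of the actual synthesis algorithm; formal meta-analysis typically uses inverse-variance weights instead.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    """One study's result (illustrative fields)."""
    effect: float       # signed effect estimate
    sample_size: int
    quality: float      # composite quality score in [0, 1]


def pooled_effect(findings):
    """Weighted mean effect, with weight = sample_size * quality."""
    weights = [f.sample_size * f.quality for f in findings]
    total = sum(weights)
    if total == 0:
        raise ValueError("all findings have zero weight")
    return sum(w * f.effect for w, f in zip(weights, findings)) / total


def has_conflict(findings):
    """True when non-zero effects point in both directions."""
    directions = {f.effect > 0 for f in findings if f.effect != 0}
    return len(directions) > 1
```

Reporting `has_conflict` alongside the pooled value keeps the disagreement visible to the reader instead of averaging it away.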

Real-World Impact: Case Studies That Demonstrate Value

Pharmaceutical Research Acceleration: A major biotech company implemented an LLR system for its oncology division. Its research teams identified promising drug targets 8 months earlier on average, directly contributing to faster pipeline progression. The system also automatically flagged a crucial safety concern in a competing compound that manual reviews had missed, potentially saving millions in failed trials.

Climate Science Meta-Analysis: Researchers studying climate feedback mechanisms deployed an LLR to track publications across atmospheric science, oceanography, and ecology. The automated synthesis revealed an emerging consensus on cloud-aerosol interactions that hadn’t yet been formalized in review papers, accelerating the field’s understanding by effectively compressing 18 months of manual review into continuous real-time integration.

The Future Is Continuously Evolving

We’re already seeing next-generation capabilities emerge. GPT-5 and similar large language models are beginning to generate sophisticated research summaries that rival human-written reviews in quality. Graph neural networks are uncovering non-obvious connections between disparate research areas, suggesting novel interdisciplinary approaches.

The most exciting development? Collaborative LLR networks where multiple institutions share their living reviews, creating a global, continuously updated knowledge commons. Imagine a world where every researcher has instant access to the collective intelligence of the entire scientific community, updated in real time.

The Bottom Line

Living Literature Reviews represent more than a technological upgrade—they’re a fundamental reimagining of how we engage with scholarly knowledge. By automating the tedious work of monitoring and synthesizing research, they free scientists to focus on what humans do best: asking profound questions, designing creative experiments, and generating breakthrough insights.

The traditional literature review has served us well for centuries, but in an age of exponential knowledge growth, it’s time to embrace living systems that evolve as rapidly as science itself. The future of research isn’t just open access; it’s continuous, collaborative, and intelligent.